- October 22, 2025

It’s common knowledge by now that both manual and automated testing have key roles in any software testing project. Automation repeats yesterday’s truths, while manual testing uncovers new surprises.

Automation is the autopilot handling stable tests at scale. Manual testing manages turbulence, edge cases, and instances where “something feels off.”

In a QA context, the question is less “manual testing vs automation testing?” and more “where does manual testing fit, and where does automation fit?” This guide shows where each approach thrives, how to document both well, and how modern low-/no-code tools can turn proven manual steps into reliable automated steps.

The Short Answer to Manual Testing vs Automation Testing

Manual and automated tests are not opposites; they complement each other.

Teams that want to scale product quality and keep their sanity must combine the two approaches. Manual testing provides the human judgment that exploratory work and ambiguous test cases require.

Automation provides repeatability, speed, and breadth. It is ideal for regression checks, data permutations, and cross-environment checks.

Manual Testing

Ideal for when:

- You need human judgment.

- You need rapid resolution of ambiguous use cases.

- You need to capture minute UX friction points and visual nuance.

- A feature is in its early stages, when requirements shift and automated checks are too unstable.

Limitations:

- Slower execution.

- Prone to human error.

- Cannot occur 24/7, unless reviewers work in shifts.

- Hard to scale without hiring new employees.

Automation Testing

Ideal for when:

- The test pipeline needs repeatability, speed, and breadth.

- Specs are stable and scenarios are high-frequency or critical (auth, payments, core APIs).

Limitations:

- Poor at judging UX.

- Requires technical skillsets for designing automated test pipelines, scripting tests, and debugging.

- May require extensive infrastructure changes unless the tool you pick fits your stack and use cases.

- Setup and maintenance can be expensive, especially when UI tests are flaky.

Manual vs. Automation: Choosing the Right Approach for the Right Work

When does manual testing work best?

Manual testing shines when tests need human judgment, flexible thinking on edge cases, and feedback on the UX.

Exploratory Testing: Testers explore workflows, edge inputs, and how the app “feels” to an actual user. This surfaces UX friction, accessibility gaps, and subtle context issues that scripted tests cannot find.

New, Rapidly Evolving Features: Manual testing is ideal while a feature is in its early stages: requirements shift daily, so scripts churn constantly. Testers validate assumptions, refine acceptance criteria, and produce reusable steps that can be converted into automated checks once the feature stabilizes.

Usability and Visual Nuance: Humans are needed to scan for microcopy clarity, visual balance, and user flows.

Low-Frequency or One-Off Scenarios: It’s easier to manually check scenarios likely to occur infrequently: year-end jobs, rare admin flows, etc.

Where does automation testing work best?

Automation works best for any test area that prioritizes stability and repetition.

Regression Suites: Automation is ideal for building fast, deterministic runs with meaningful assertions and failure artifacts (logs, traces, screenshots).

Data & Environment Permutations: Automation makes it practical to repeat the same tests across browsers, devices, locales, roles, feature flags, and payload matrices.

Performance, Load, & API Contracts: Automation lets testers encode SLAs and schemas as executable checks. Run smoke checks on each merge and schedule heavier load tests to run at night.

Security Gates & Static Checks: It is best to automate SAST/DAST, dependency audits, secret scanning, and policy-as-code.

Happy-Path & Critical Flows: Automation is well suited to verifying payments, auth, and other P0 flows on every run. Keep these tests resilient with stable locators and proper waits.

How to Document Manual Test Cases vs. Automated Test Cases

Accurate, protocol-based documentation makes tests discoverable, traceable, and auditable. Whether a test is manual or automated, ensure that its documentation is clear (anyone can run it), traceable (linked to requirements/risk), deterministic (clear pass/fail), and observable (evidence captured).

Manual Test Cases: What to Capture

- ID & Title: unique identifier for search and reporting.

- Objective: what the test is meant to achieve.

- Preconditions & Data: environment, flags, accounts, seed data.

- Steps: small, numbered, one task per step.

- Expected Results: what the result should be.

- Actual Results: what the result actually is.

- Evidence: screenshots/logs/video when needed for bug resolution.

- Ownership: requirement ID, links to defects, owner, tags for traceability.

Manual Test Case: Example

ID: M-CHK-014

Title: Guest checkout applies valid % discount code

Objective: Ensure promo logic correctly reduces order total for guest users.

Preconditions:

- Env: staging

- Feature flag: promos_v2=ON

- Data: Product SKU-TEE-XL, price $1,000, in stock; promo SAVE10 = 10% off, min cart $500

- Dependencies: Payments sandbox reachable

Steps & Expected Results:

- Add SKU-TEE-XL to cart → Cart shows $1,000 subtotal

- Proceed to checkout as Guest → Guest checkout form appears

- Enter shipping details (valid) → “Continue to payment” enabled

- Apply promo code SAVE10 → Discount line shows −$100; total $900

- Complete payment with test card 4111…1111 → Order confirmation page; status “PAID”

Evidence: Screenshot of Order Confirmation, payment logs
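
The expected values in this case follow directly from its preconditions, and it helps to check the arithmetic before writing the steps. Here is a minimal sketch, assuming the promo is a flat percentage of the subtotal that applies only once the minimum cart value is reached. The function name and the "no discount below the minimum" behavior are illustrative assumptions, not part of any real promo engine.

```python
from decimal import Decimal


def apply_percent_promo(subtotal: Decimal, percent: Decimal, min_cart: Decimal):
    """Return (discount, total) for a percent promo with a minimum cart value."""
    if subtotal < min_cart:
        # Assumption: below the minimum, the code is simply not applied.
        return Decimal("0"), subtotal
    discount = (subtotal * percent / Decimal("100")).quantize(Decimal("0.01"))
    return discount, subtotal - discount


# Values from M-CHK-014: $1,000 subtotal, SAVE10 = 10% off, min cart $500.
discount, total = apply_percent_promo(Decimal("1000"), Decimal("10"), Decimal("500"))
print(discount, total)
```

Using `Decimal` rather than floats keeps currency values exact, which matters when the test later asserts on the displayed total.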

Automated Test Cases: What to Capture

Note: Low-code and no-code testing have quickly gained ground in software testing. That is no surprise, since these technologies widen who can contribute to testing: stakeholders without coding expertise can build tests by giving instructions in natural language. For that reason, this piece focuses on codeless tests in automation contexts.

- Case Mapping: link to manual case/requirement (caseId, reqId).

- Setup/Fixtures: environment, data builders, auth.

- Deterministic Steps: resilient locators, explicit waits.

- Assertions: specific business outcomes.

- Evidence on Failure: screenshot, console, network trace.

- Execution Metadata: tags, owner, CI job.

Example: Test Case Metadata (Codeless & Excel-First)

Case Id: M-CHK-014

Req Id: REQ-PROMO-22

Owner: Priya.N

Module: Checkout

Feature: Discounts

Sub Feature: Percent

Scenario: Guest checkout applies 10% promo code

Criticality: P0

Tags: automated, checkout, p0, ui, regression

Preconditions: Staging URL available; product “Tee XL” in catalog; payment sandbox enabled

Test Objective: Apply SAVE10 at checkout and verify final total

Expected Result: Discount line shows -$100; total shows $900

Example: Codeless Test (Codeless & Excel-First)

| Step | Keyword / Action | Test Object | Locator Ref (UI Properties) | Test Data | Expected Result | Timeout | Screenshot | Comments |
|---|---|---|---|---|---|---|---|---|
| 1 | Navigate to URL | Base URL | base.url | - | Homepage is displayed | 10s | on-fail | Use ENV var if defined |
| 2 | Click link | Product: “Tee XL” | product.link.teeXL | - | PDP for “Tee XL” is visible | 10s | on-fail | - |
| 3 | Click button | Add to Cart | btn.addToCart | - | Cart count increments | 10s | on-fail | - |
| 4 | Click link | Checkout | link.checkout | - | Checkout page (Guest) heading is visible | 10s | on-fail | Validate “Guest Checkout” heading |
| 5 | Input text | Promo Code field | input.promoCode | SAVE10 | - | 10s | on-fail | - |
| 6 | Click button | Apply promo | btn.applyPromo | - | Discount line appears | 10s | on-fail | - |
| 7 | Assert text equals | Discount line | label.discountLine | -$100 | Shows -$100 | 10s | always | Precise assertion |
| 8 | Assert text equals | Order total | label.orderTotal | $900 | Shows $900 | 10s | always | Precise assertion |
| 9 | Input text | Card Number | input.cardNumber | 4111111111111111 | - | 10s | on-fail | Sandbox number |
| 10 | Input text | Expiry | input.cardExpiry | 12/30 | - | 10s | on-fail | - |
| 11 | Input text | CVC | input.cardCvc | 123 | - | 10s | on-fail | - |
| 12 | Click button | Pay Now | btn.payNow | - | Order confirmation heading is visible | 20s | on-fail | - |
| 13 | Assert visible | Order Confirmed H1 | h1.orderConfirmed | - | “Order confirmed” is visible | 10s | always | Final assertion |
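
For readers curious about what a keyword-driven table like this implies under the hood, here is a rough sketch of the general idea: each row's keyword is looked up and dispatched to an action. This is not how TestWheel or any specific tool executes steps. `Step`, `FakePage`, and `run_steps` are hypothetical names, and the fake page is an in-memory stand-in for a real browser driver.

```python
from dataclasses import dataclass


@dataclass
class Step:
    keyword: str    # "Keyword / Action" column
    locator: str    # "Locator Ref" column
    data: str = ""  # "Test Data" column


class FakePage:
    """In-memory stand-in for a browser driver (hypothetical, for illustration)."""

    def __init__(self, texts):
        self.texts = dict(texts)
        self.clicked = []

    def click(self, locator):
        self.clicked.append(locator)

    def fill(self, locator, value):
        self.texts[locator] = value

    def text(self, locator):
        return self.texts.get(locator, "")


def run_steps(page, steps):
    """Run steps in order and return (position in list, passed) pairs."""
    actions = {
        # click() and fill() return None, so "or True" marks the step as passed.
        "Click button": lambda s: page.click(s.locator) or True,
        "Input text": lambda s: page.fill(s.locator, s.data) or True,
        "Assert text equals": lambda s: page.text(s.locator) == s.data,
    }
    results = []
    for number, step in enumerate(steps, start=1):
        if step.keyword not in actions:
            raise ValueError(f"Unknown keyword: {step.keyword}")
        results.append((number, actions[step.keyword](step)))
    return results


# Rows 5-8 of the table; the fake page already "shows" the discounted values.
page = FakePage({"label.discountLine": "-$100", "label.orderTotal": "$900"})
steps = [
    Step("Input text", "input.promoCode", "SAVE10"),
    Step("Click button", "btn.applyPromo"),
    Step("Assert text equals", "label.discountLine", "-$100"),
    Step("Assert text equals", "label.orderTotal", "$900"),
]
results = run_steps(page, steps)
```

A real runner would also honor the Timeout and Screenshot columns; they are left out here to keep the sketch short.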

TestWheel simplifies automated testing by eliminating the need for coding. A few simple clicks and uploads, and you’re good to go.

Benefits of Structured Documentation for both Manual and Automated Testing

No matter the approach, structured docs keep test pipelines trackable, explainable, and reusable. They are non-negotiable in modern test flows.

- Traceability: Questions like “What proves REQ-123 works?” can be answered in seconds. Simply link case ↔ requirement ↔ script ↔ build.

- Smarter prioritization: Hotfix in the pipeline? Just pull the P0 smoke test result with tags or test IDs.

- Less duplication, fewer gaps: Overlaps can be avoided since test IDs and other identifiers are made unique and searchable.

- Faster onboarding: It’s easier to onboard new team members if they can just read a test case and get all the information they need.

- Informative reports: Reports automatically include test details, evidence, and the gap between expected and actual results. This simplifies debugging.

- Easier to automate: Comprehensive documentation of manual test cases actually helps with setting up automation pipelines. A tool like TestWheel can convert detailed manual test cases into executable automation steps.
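
To make the traceability point concrete, here is a small sketch of the case ↔ requirement ↔ script ↔ build link as plain data. REQ-PROMO-22 and M-CHK-014 come from the examples in this guide; every other ID, path, and build label is a made-up placeholder, and the function names are hypothetical.

```python
from collections import defaultdict

# Hypothetical link records: (requirement ID, case ID, script path, build).
LINKS = [
    ("REQ-PROMO-22", "M-CHK-014", "tests/checkout/test_promo.py", "build-481"),
    ("REQ-AUTH-07", "AUTH-002", "tests/auth/test_login_2fa.py", "build-481"),
]


def index_by_requirement(links):
    """Group linked cases under the requirement they prove."""
    index = defaultdict(list)
    for req_id, case_id, script, build in links:
        index[req_id].append({"case": case_id, "script": script, "build": build})
    return index


def untested(requirements, index):
    """Return requirements that have no linked case at all."""
    return sorted(r for r in requirements if r not in index)


index = index_by_requirement(LINKS)
proof = index.get("REQ-PROMO-22", [])
gaps = untested(["REQ-PROMO-22", "REQ-AUTH-07", "REQ-SEARCH-03"], index)
```

With links stored this way, “What proves REQ-PROMO-22 works?” is a dictionary lookup, and coverage gaps fall out of a simple membership check.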

Build a Single Source of Truth for Test Management

The right test management tool will become a source of truth for what a test needs to run and what it proves. At a high level, a tool like TestWheel will let you capture:

- Objective: The risk you’re reducing or the behavior you’re proving.

- Expected results: Clear pass/fail outcomes. Ideally, one expected result per step.

- Dependencies (what the test needs to run): Data, accounts, feature flags, environments, third-party services.

Example

ID: AUTH-002

Title: Login succeeds for active user with 2FA

Objective: Prove a valid user can sign in with the correct password + OTP.

Preconditions/Dependencies: Staging env; user jane@example.com ACTIVE; 2FA=ON; OTP service reachable

Steps:

1) Enter email/password → Expect “Enter 6-digit code”

2) Enter valid OTP → Expect dashboard loads with greeting “Hi, Jane”

Evidence: Screenshot on success; auth logs ref

Pro-Tips:

- Define the objective in one sentence. If it needs more than one sentence, the test might need to be split.

- Ensure that expected results are observable and specific in terms of values, messages and system states.

- Identify the owner of each dependency. For example, “QA seeds users,” “Ops owns flags”.

- Tag tests appropriately (@p0, @auth, @manual/@automated) so they are identifiable and traceable.
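
Some of these tips can be checked mechanically during review. The sketch below is one possible way to do that, assuming cases are stored as simple records. `ManualCase` and `review_case` are hypothetical names, and the one-sentence check is a rough heuristic based on end-of-sentence punctuation.

```python
import re
from dataclasses import dataclass, field


@dataclass
class ManualCase:
    case_id: str
    objective: str
    steps: list  # list of (action, expected result) pairs
    tags: set = field(default_factory=set)


def review_case(case):
    """Return review findings; an empty list means the case passes."""
    findings = []
    # Rough sentence count: split after ., ! or ? when followed by whitespace.
    parts = re.split(r"(?<=[.!?])\s+", case.objective.strip())
    sentences = [p for p in parts if p]
    if len(sentences) != 1:
        findings.append("objective should be one sentence; consider splitting the test")
    for number, (_action, expected) in enumerate(case.steps, start=1):
        if not expected.strip():
            findings.append(f"step {number} has no expected result")
    if not case.tags & {"@manual", "@automated"}:
        findings.append("missing @manual/@automated tag")
    return findings


auth_002 = ManualCase(
    case_id="AUTH-002",
    objective="Prove a valid user can sign in with the correct password + OTP.",
    steps=[
        ("Enter email/password", "Enter 6-digit code"),
        ("Enter valid OTP", "Dashboard loads with greeting Hi, Jane"),
    ],
    tags={"@p0", "@auth", "@manual"},
)
findings = review_case(auth_002)
```

A check like this does not replace a human review, but it catches missing expected results and untagged cases before they reach one.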

Integrate Automation Frameworks and Test Cases

Automation only delivers consistent results when your test automation framework and test management tool “talk” to each other.

A few guidelines on how to achieve this at the foundational levels:

- Use the same test case ID in the tool and in the script (as its name, a tag, or metadata).

- Configure the system to attach evidence (logs, screenshots, traces) on every failure.

- Use the same tags in code and in the tool.

- Store the repo path (or test ID) in the case record to connect every failing case to its exact spec.
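
The guidelines above can be verified with a small consistency check between what the tool holds and what a run reports. This is only a sketch under simple assumptions: `CaseRecord`, `RunResult`, and `check_sync` are hypothetical, and a real setup would read these records from the test management tool and the CI run rather than build them by hand.

```python
from dataclasses import dataclass, field


@dataclass
class CaseRecord:
    """What the test management tool stores for a case."""
    case_id: str
    spec_path: str
    tags: frozenset


@dataclass
class RunResult:
    """What the automation framework reports for one executed test."""
    case_id: str
    passed: bool
    tags: frozenset
    evidence: list = field(default_factory=list)


def check_sync(cases, results):
    """Return problems that would break the link between cases and scripts."""
    by_id = {c.case_id: c for c in cases}
    problems = []
    for r in results:
        case = by_id.get(r.case_id)
        if case is None:
            problems.append(f"{r.case_id}: no matching case record")
            continue
        if r.tags != case.tags:
            problems.append(f"{r.case_id}: tags differ between code and tool")
        if not r.passed and not r.evidence:
            problems.append(f"{r.case_id}: failed without evidence")
    return problems


cases = [CaseRecord("M-CHK-014", "tests/checkout/test_promo.py", frozenset({"p0", "checkout"}))]
results = [
    RunResult("M-CHK-014", passed=False, tags=frozenset({"p0", "checkout"})),
    RunResult("M-CHK-099", passed=True, tags=frozenset({"p1"})),
]
problems = check_sync(cases, results)
```

Running a check like this in CI makes drift visible early, before a failing case turns up with no spec to trace it to.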

Pro Tips: Organizing Your Tests for Automation vs Manual Testing

Take tags seriously. Keep them few, clear, and reusable. A few examples:

- Type: @manual, @automated

- Layer: @unit, @api, @ui, @perf, @security

- Platform: @web, @ios, @android, @desktop

- Risk/Priority: @p0, @p1, @p2

- Lifecycle/Intent: @smoke, @regression, @canary, @release-blocker

- Domain/Feature: @auth, @checkout, @search, @billing, etc.

- Create a glossary listing all tags in a project, with definitions and examples.

- Group tests by type (manual vs. automated).

- Maintain separate views over a shared taxonomy: keep one project and filter it by tags.

- Keep it to ~5–8 tags per test; enforce via review checklist.

- Don’t create synonyms such as @payment vs. @payments. Pick one.

- Version risky tags if rules change, for example @p0.v2 during a transition.

- Don’t tag feelings (“@maybe-flaky”). Use @flaky + owner + fix-by date.
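
Tag rules like these are easy to enforce with a small lint step in review or CI. The following is a minimal sketch, assuming the glossary is a flat set of allowed tags. `lint_tags` is a hypothetical helper, and requiring exactly one of @manual/@automated is an added assumption rather than a rule stated above.

```python
# Glossary built from the example tags in this guide, plus @flaky.
GLOSSARY = {
    "@manual", "@automated",
    "@unit", "@api", "@ui", "@perf", "@security",
    "@web", "@ios", "@android", "@desktop",
    "@p0", "@p1", "@p2",
    "@smoke", "@regression", "@canary", "@release-blocker",
    "@auth", "@checkout", "@search", "@billing",
    "@flaky",
}
MAX_TAGS = 8  # upper end of the ~5-8 guideline


def lint_tags(tags, glossary=GLOSSARY, max_tags=MAX_TAGS):
    """Return findings for one test's tags; an empty list means the tags pass."""
    unique = set(tags)
    findings = []
    unknown = sorted(unique - glossary)
    if unknown:
        # Catches synonyms and ad hoc tags such as @payments or @maybe-flaky.
        findings.append("not in glossary: " + ", ".join(unknown))
    if len(unique) > max_tags:
        findings.append(f"too many tags ({len(unique)} > {max_tags})")
    if len(unique & {"@manual", "@automated"}) != 1:
        # Assumption: every test carries exactly one type tag.
        findings.append("exactly one of @manual/@automated is required")
    return findings


clean = lint_tags(["@automated", "@ui", "@p0", "@checkout", "@regression"])
messy = lint_tags(["@manual", "@payments", "@maybe-flaky"])
```

Because unknown tags are rejected outright, a new tag has to be added to the glossary first, which keeps the taxonomy deliberate.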

Pro-Tip: Prioritizing Tests

- Score tests with simple RPN = Impact × Likelihood × Detectability.

- Automate high-RPN paths first. Focus exploratory testing on areas where bug detectability is low.

- Prioritize the flows with highest % of real-world usage, highest impact on revenue and most severe blast radius.

- Test thoroughly the software segments with frequent code changes, many dependencies, or complex state machines.

- Pre-determine a flakiness limit (e.g., <1%) and pause new test creation until it’s met.

- Prioritize assertions that prove business outcomes over brittle UI checks, especially in the early stages.

- Define exit criteria for tests clearly. For example, “100% P0 pass, zero open P0 defects, flake ≤1%, top-N journeys green across key environments”.
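
The RPN score and the exit criteria are both simple enough to compute. Here is a sketch, assuming each factor is scored from 1 to 10 and that a higher detectability score means a bug is harder to catch (the usual FMEA convention; flip the scale if your team scores it the other way). The flows and their scores are invented for illustration, and the "top-N journeys green" criterion is left out for brevity.

```python
from dataclasses import dataclass


@dataclass
class Flow:
    name: str
    impact: int         # 1 (minor) to 10 (severe)
    likelihood: int     # 1 (rare) to 10 (frequent)
    detectability: int  # 1 (easy to catch) to 10 (hard to catch)

    @property
    def rpn(self):
        # RPN = Impact x Likelihood x Detectability
        return self.impact * self.likelihood * self.detectability


def rank_by_rpn(flows):
    """Highest-risk flows first: candidates to automate early."""
    return sorted(flows, key=lambda f: f.rpn, reverse=True)


def meets_exit_criteria(p0_passed, p0_total, open_p0_defects, flaky_runs, total_runs):
    """100% P0 pass, zero open P0 defects, flake rate at or below 1%."""
    flake_rate = flaky_runs / total_runs if total_runs else 0.0
    return (
        p0_total > 0
        and p0_passed == p0_total
        and open_p0_defects == 0
        and flake_rate <= 0.01
    )


flows = [
    Flow("checkout", impact=9, likelihood=6, detectability=4),          # RPN 216
    Flow("search filters", impact=4, likelihood=7, detectability=3),    # RPN 84
    Flow("year-end report", impact=7, likelihood=2, detectability=8),   # RPN 112
]
ranked = [f.name for f in rank_by_rpn(flows)]
ready = meets_exit_criteria(p0_passed=40, p0_total=40, open_p0_defects=0,
                            flaky_runs=3, total_runs=500)
```

Here the rare year-end report outranks search filters because its bugs are hard to detect, which is exactly the kind of flow that also deserves exploratory attention.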

Assigning Responsibilities To Keep Projects Productive

Quality scales best when everyone knows exactly what their responsibilities are:

- Developers write and maintain unit and integration tests. They make features testable and fix any flaky tests in their bucket during sprints.

- QA Analysts run exploratory tests. They design manual tests with clear objectives/expected results/dependencies. They can collaborate with SDETs to convert stable, high-value cases to automation.

- SDETs manage the framework, CI wiring, and test data builders. They keep test suites reliable (flake triage, retries, self-healing strategies).

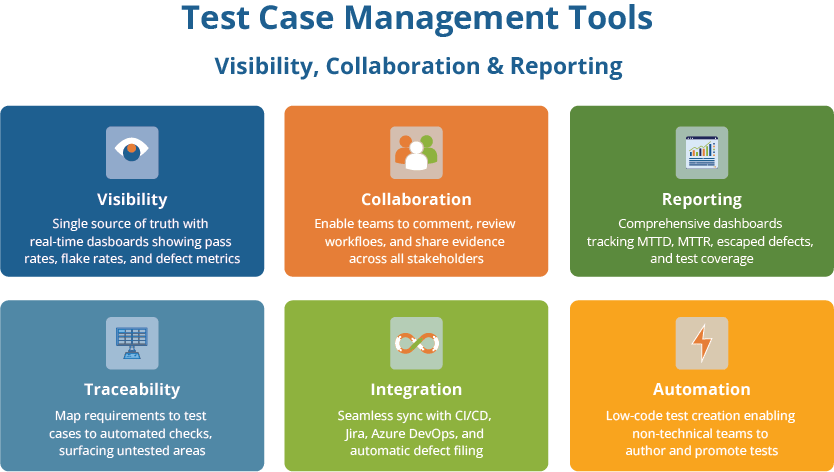

Why Use Test Case Management Tools for Visibility, Collaboration, and Reporting?

Test case management is part science and part art. Specialized tools help strike that balance and adapt to workflows that vary by project, team, and software needs.

- Offers a single source of truth. Project members can store manual test cases and link automated test IDs/scripts in one place. This helps all stakeholders see the latest progress and run status in a single view.

- Maps requirements/user stories → test cases → automated checks → defects. This surfaces untested requirements and features with repeated failures.

- The right test management tool comes with dashboards that show P0/P1 pass rates, flake rate, escaped defects, mean time to diagnose (MTTD), and mean time to repair (MTTR).

- Tools let testers and other stakeholders leave comments and mentions, run review workflows, and attach evidence such as screenshots, logs, and traces.

- A tool like TestWheel can enforce naming conventions, tagging (e.g., @manual, @automated, @api, @p0), ownership fields, and SLAs for triage.

- Test management tools often integrate and sync with CI/CD results, auto-file defects with artifacts, and notify the right owners via chat. TestWheel, for example, syncs with Jenkins/Jira/Azure DevOps.

- Tools can track all revisions to cases, diff changes, and link them to PRs or change requests.

- Specific tools like TestWheel enable low-code test creation and execution. Even non-SDET teammates can author cases and promote stable ones to automation. The tool can even convert manual test cases into automatically executable steps.

Transition to No Code Testing with TestWheel

With a tool like TestWheel, users can upload existing Selenium scripts or Excel-based test cases, which are automatically converted into runnable test steps. It offers a clear, concise, and user-friendly interface that provides quick access to critical information and tools based on the user’s role and permissions.

TestWheel also enables simpler no-code testing by self-healing all test scripts as soon as any UI changes occur. Here’s what to look for if you schedule a demo of TestWheel.

- Enables test creation and execution across UI and APIs, as well as performance tests.

- Users can upload manual test cases written in plain English directly to the platform. They can also use the TestWheel template for test case creation.

- The uploaded natural language input is processed by TestWheel’s AI model, which then performs the required actions, such as navigating to specified URLs, filling out fields, clicking buttons, and verifying elements.

- The AI model extracts UI elements automatically and identifies the properties needed to locate them again for validation if the UI changes.

- The platform scales to handle all the test executions once testing is complete.

- Reports are generated in PDF and playback video format.

- Seamless integration with popular tools like Azure DevOps, Jira, and Jenkins.

- Link test results directly to user stories or tickets in Jira.

- Sync test execution data across development, QA, and DevOps environments.

- Support for REST APIs and webhooks to connect with other platforms.