- September 11, 2025

No-code software testing uses AI to author large parts of test cases, removing much of the human effort needed to code automated tests from scratch in open-source frameworks. Humans still have to provide context and feedback to AI models and their test cases (custom functions, data setup, complex assertions), but AI takes over most of the grunt work.

Unsurprisingly, no-code, AI-powered testing is gaining traction. It enables faster, model-driven test design that even non-coding team members can contribute to. This is key to keeping pace with shorter release cadences and with systems that have many moving parts: SPA front-ends, mobile clients, APIs, and background jobs.

At the same time, many teams feel stuck with the status quo of manual testing despite wanting to move to no-code software testing. They have to make the technical shift while also meeting the next deadline, managing retraining, and guarding against new forms of flakiness in the pipeline.

That’s where this article can help. It lays out a pragmatic path for adopting no-code software testing in your organization without delaying delivery. You’ll get guidance on how to evaluate your team’s fitness for no-code, AI-driven testing, estimate total cost, communicate the benefits of the transition to stakeholders, align with shift-left and CI/CD practices, and use AI only where it genuinely helps.

Is No‑Code Test Automation Right for Your Team?

When deciding on this question, ask yourself the following:

- How often do you ship? If your team runs on a weekly release cadence (or even faster), tests must be created quickly without driving up maintenance effort. No-code testing can help.

- What tools must the AI no-code test agent integrate with? Inventory all CI/CD pipelines, test case managers, and bug trackers. The right platform should integrate easily with your existing stack.

- What is the skill mix in your team? Are testers experienced with exploratory tests and domain knowledge, but not as much with coding? A No-code AI agent is perfect for magnifying their contribution.

- What app surfaces should the No-code testing tool be able to test — web, APIs, mobile? Should it be able to run performance/load tests?

- Catalogue the organization’s standards for governance and security — SSO/RBAC, audit, encryption, and data masking. Does the platform you are looking to adopt meet all compliance requirements?

Consider this Quick Cost-Benefit Frame, With Regard to Engineering Hours

Let’s say a senior SDET spends 8 hours building and stabilizing a UI test in a code framework, and the same test takes them 2 hours in a mature no-code tool. That is 6 hours saved per test. At 400 stable UI tests/year, that’s 2,400 engineer hours saved.
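The arithmetic behind that estimate can be written down so you can plug in your own numbers. This is a minimal sketch; the function name `hours_saved` is illustrative, and the inputs are the example figures from the paragraph above, not measured data.

```python
def hours_saved(code_hours_per_test: float,
                nocode_hours_per_test: float,
                tests_per_year: int) -> float:
    """Engineer hours saved per year by authoring tests in a no-code tool."""
    per_test_saving = code_hours_per_test - nocode_hours_per_test
    return per_test_saving * tests_per_year


# Example figures from the text: 8 h in code, 2 h in no-code, 400 tests/year.
annual_saving = hours_saved(8, 2, 400)
print(annual_saving)  # 2400
```

Replace the three inputs with figures from your own time tracking before presenting the result to stakeholders.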

A no-code software testing tool like TestWheel provides test authoring at a higher velocity, lower maintenance via resilient locators/self‑healing, and built-in reporting. The savings in engineering effort and time add up quickly.

How to Communicate Benefits of No-Code Testing to Stakeholders

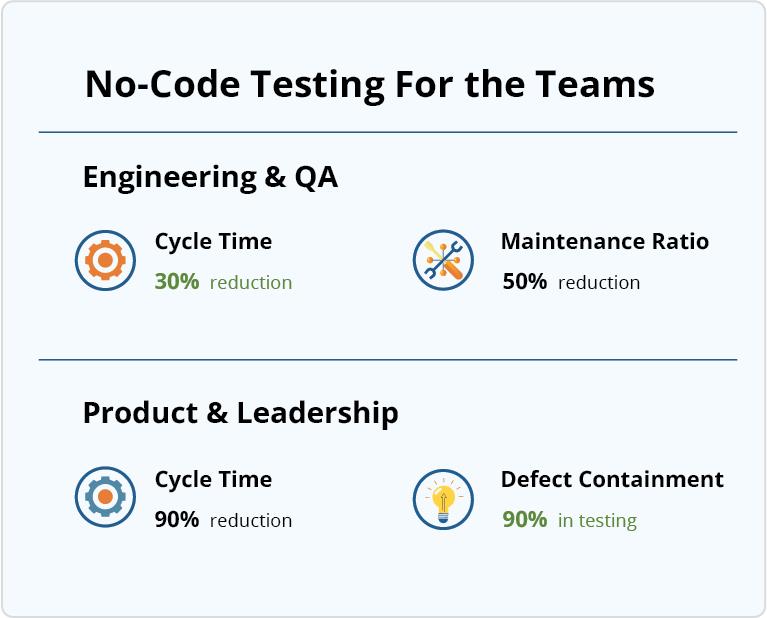

Different stakeholders pay attention to different metrics. Here are a few guidelines on how to translate the benefits of No code testing to different teams.

Engineering & QA

- Calculate throughput as median hours from test idea → PR merged → first green run on main pipeline. Track the number of tests/week/contributor and the % of steps reused across suites. When you run the same suite on a No-code tool, it will showcase the time saved as testers no longer have to manage driver setup, waits, locators, and reporting. You’ll be able to prove a concrete delta on a fixed set of variables.

- Showcase test stability clearly. Set a benchmark for minimum acceptable flakiness (e.g., <2% non‑deterministic failures/week). Then run tests on No-code toolsets and demonstrate how self-healing works in action — automatically adjusting selectors, visual diffs, and test results with every UI change.

- Benchmark the amount of QA time spent on coding, including framework setup, retries, report generation, and grid management. The right tool will shrink the time considerably, leaving QA more time to run negative paths, boundary assertions, and stateful API sequences.
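As a rough sketch of how the throughput and flakiness numbers above could be computed from raw run data: the record shapes here are assumptions, and "non-deterministic failure" is taken to mean a failure of a test on a commit where the same test also passed. Your own definition may differ.

```python
from collections import defaultdict
from statistics import median


def median_throughput_hours(lead_times_hours):
    """Median hours from test idea to first green run on the main pipeline."""
    return median(lead_times_hours)


def flake_rate(runs):
    """Share of runs that are non-deterministic failures.

    runs: list of (test_id, commit, passed) tuples (hypothetical shape).
    A failure counts as flaky when the same test also passed on the same commit.
    """
    outcomes = defaultdict(set)
    for test_id, commit, passed in runs:
        outcomes[(test_id, commit)].add(passed)
    flaky_failures = sum(
        1 for test_id, commit, passed in runs
        if not passed and True in outcomes[(test_id, commit)]
    )
    return flaky_failures / len(runs) if runs else 0.0


runs = [
    ("login", "abc123", False),    # failed, then passed on re-run: flaky
    ("login", "abc123", True),
    ("checkout", "abc123", True),
    ("search", "abc123", False),   # failed consistently: a real failure
]
weekly_flake_rate = flake_rate(runs)
meets_benchmark = weekly_flake_rate < 0.02   # the <2% benchmark from the text
```

Computing the same two numbers on the same suite before and after the move gives you the delta on a fixed set of variables.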

For Product & Leadership

- Demonstrate how No-code testing platforms like TestWheel actively accelerate time to release without compromising software quality. Fewer flaky tests and faster test creation reduce hand-off delays.

- Showcase the reduction in maintenance burden. Track the QA maintenance ratio (hours maintaining automation / total QA hours). Then run suites on the no-code tool in order to cap it at a predetermined percentage, ≤25%, for example. This keeps sprints mostly predictable.

- Measure defect containment (pre‑prod defects / total defects) and escaped‑defect rate (measured per release). No-code, AI-driven testing can shift-left API checks and self-heal UI flows to increase defect containment and reduce escaped defect rate.

- Measure the hours that testers previously spent authoring, running, and reporting on tests. A platform like TestWheel should cut this time by a measurable percentage and simplify governance with SSO/RBAC, audit trails, and the like.
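The leadership metrics above are simple ratios. The sketch below shows one way to compute them; the sample numbers are made up for illustration, and treating escaped-defect rate as escaped defects divided by total defects in a release is an assumption.

```python
def ratio(numerator: float, denominator: float) -> float:
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


# Illustrative numbers for one release (not real data).
maintenance_ratio = ratio(30, 120)    # hours maintaining automation / total QA hours
defect_containment = ratio(45, 50)    # pre-prod defects / total defects
escaped_defect_rate = ratio(5, 50)    # escaped defects / total defects (assumed definition)

within_cap = maintenance_ratio <= 0.25   # the example 25% cap from the text
```

Tracking these per release, rather than as one-off snapshots, makes the before/after comparison easier to defend.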

DO NOT FALL FOR HYPE. Do the math, hour-by-hour, before and after tests. Run a phased rollout with specific exit criteria. Lead with numbers that leadership actually cares about — cycle time, escaped‑defect rate, and maintenance ratio.

Strategies for a Smooth Transition to No-Code Software Testing

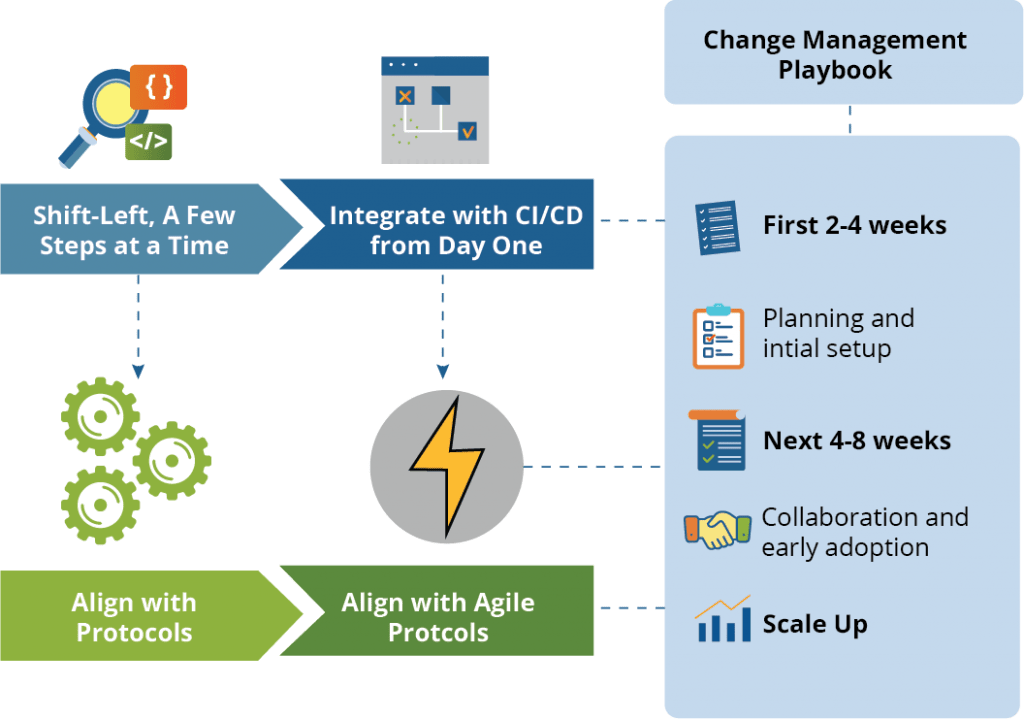

When shifting to a no-code test pipeline, balance technical guardrails with deliberate change management. Consider the following operational sequence for introducing no-code testing to your stack pragmatically.

Shift‑Left, A Few Steps at A Time

- Pick the first ten journeys to run on the no-code testing framework. Assign a score to each: Reach × Impact × Churn, that is, how many end users use the feature, the business risk if it fails, and how often the UI/logic changes.

- Build reusable blocks for auth, navigation, search, checkout, payments, notifications, and data setup/teardown. In new test cases, you just have to change the parameters.

- For each test, check if any steps can be validated at the API boundary (status codes, JSON schema, side‑effects). Verify these with API tests. Keep extensive UI checks for user-facing elements (layout, accessibility, critical selectors).
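The Reach × Impact × Churn scoring described above can be sketched in a few lines. The 1 to 5 scale, the `Journey` class, and the sample journeys are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Journey:
    name: str
    reach: int    # how many end users use the feature (1-5, assumed scale)
    impact: int   # business risk if it fails (1-5)
    churn: int    # how often the UI/logic changes (1-5)

    @property
    def score(self) -> int:
        return self.reach * self.impact * self.churn


def top_journeys(journeys, n=10):
    """Return the n highest-scoring journeys, best first."""
    return sorted(journeys, key=lambda j: j.score, reverse=True)[:n]


candidates = [
    Journey("checkout", reach=5, impact=5, churn=3),      # score 75
    Journey("login", reach=5, impact=4, churn=1),         # score 20
    Journey("profile-edit", reach=2, impact=2, churn=2),  # score 8
]
first_batch = [j.name for j in top_journeys(candidates, n=2)]
```

A multiplicative score pushes journeys that are high on all three dimensions to the top; if one dimension should dominate, a weighted variant may fit better.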

Integrate with CI/CD from Day One

Shape your pipeline roughly around the following:

- On PR: fast targeted suites (tags: @smoke, @changed-areas) with test-impact analysis. Keep the run under 10 minutes.

- On merge to main: full smoke tests as well as testing critical paths across one browser/device combination.

- Nightly/cron: full regression matrix + API tests + performance smoke.

- Configure a single re-run for each test (if needed). Collect screenshots/video/logs.

- Quarantine tests that are known to be flaky with a @quarantine tag.

- Parallelize tests by tag or average time taken to execute.

- Keep nightly regression under 30 minutes.

- Publish JUnit/TRX reports, videos, screenshots, and network logs per test. Auto-attach these to bug tickets so devs can find root causes immediately.
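Two of the rules above, tag-based selection with quarantine and parallelizing by average execution time, can be sketched as follows. The test records and field names (`tags`, `avg_seconds`) are hypothetical; a real no-code platform or CI system will expose its own configuration for this.

```python
import heapq


def select(tests, wanted_tags):
    """Keep tests carrying any wanted tag, skipping quarantined ones."""
    return [
        t for t in tests
        if t["tags"] & wanted_tags and "@quarantine" not in t["tags"]
    ]


def shard_by_duration(tests, workers):
    """Greedy longest-first assignment so workers finish at similar times."""
    heap = [(0.0, i, []) for i in range(workers)]   # (total seconds, worker, names)
    heapq.heapify(heap)
    for t in sorted(tests, key=lambda t: t["avg_seconds"], reverse=True):
        total, i, names = heapq.heappop(heap)       # least-loaded worker so far
        names.append(t["name"])
        heapq.heappush(heap, (total + t["avg_seconds"], i, names))
    return [(total, names) for total, _, names in sorted(heap, key=lambda s: s[1])]


tests = [
    {"name": "login", "tags": {"@smoke"}, "avg_seconds": 40},
    {"name": "checkout", "tags": {"@smoke", "@changed-areas"}, "avg_seconds": 90},
    {"name": "search", "tags": {"@smoke", "@quarantine"}, "avg_seconds": 30},
    {"name": "profile", "tags": {"@regression"}, "avg_seconds": 60},
    {"name": "cart", "tags": {"@smoke"}, "avg_seconds": 50},
]
pr_suite = select(tests, {"@smoke", "@changed-areas"})
shards = shard_by_duration(pr_suite, workers=2)
```

In this example the quarantined `search` test is skipped, and both workers end up with 90 seconds of work.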

Align with Agile Protocols

- Turn previously set acceptance criteria into verification steps on the no-code platform. Build the test while defining the user story. Keep IDs of before-and-after tests linked for traceability.

- Re-plan sprint capacity according to what devs can take on with their new no-code capabilities.

- Test the feature. Collect evidence of it passing automated checks within shorter windows and with less dev/QA effort.

Run Integrations that Reduce Friction

- Integrate the no-code tool with Jira or Azure DevOps. Link test runs to user stories and bugs. If a test fails, the engine should automatically log a ticket with reproducible steps, environment data, screenshots, and videos.

- Pass/fail results should be posted to specific channels (depending on team needs) with attached evidence.

- Define roles for SSO & RBAC: Author (creates/edits tests), Reviewer (approves changes), Operator (runs the tests), Admin (manages integrations/governance).

- Enforce least privilege; enable audit logs.

- Tag tests with domain owners; failed runs should mention the right team automatically.
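The four roles and the least-privilege rule above might be modeled like this. The action names and the in-memory `audit_log` are placeholders for illustration; in practice the platform's own RBAC settings and audit trail would hold this.

```python
from enum import Enum


class Role(Enum):
    AUTHOR = "author"
    REVIEWER = "reviewer"
    OPERATOR = "operator"
    ADMIN = "admin"


# Hypothetical action names; each role gets only what the text assigns to it.
PERMISSIONS = {
    Role.AUTHOR: {"create_test", "edit_test"},
    Role.REVIEWER: {"approve_change"},
    Role.OPERATOR: {"run_test"},
    Role.ADMIN: {"manage_integrations", "manage_governance"},
}

audit_log = []


def authorize(user: str, role: Role, action: str) -> bool:
    """Deny by default, and record every decision for later audit."""
    allowed = action in PERMISSIONS.get(role, set())
    audit_log.append(
        {"user": user, "role": role.value, "action": action, "allowed": allowed}
    )
    return allowed
```

For example, `authorize("dana", Role.AUTHOR, "run_test")` returns `False` and still leaves an audit entry, which is the behavior least privilege plus audit logging calls for.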

Change Management Playbook

First 2–4 Weeks:

- A single squad.

- 30-40 tests across UI and API.

- Success criteria: <2% flake rate, smoke under 10 minutes, nightly regression under 30 minutes.

- 80% of failures should be diagnosable from the evidence alone.

- Schedule tests in office hours.

- Match an SDET with a manual tester to help the latter upgrade their skills.
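The pilot's success criteria are easy to encode as an explicit gate. A minimal sketch, assuming you already collect the four inputs; the function name and argument names are illustrative.

```python
def pilot_exit_check(flake_rate: float,
                     smoke_minutes: float,
                     nightly_minutes: float,
                     diagnosable_share: float) -> dict:
    """Evaluate the pilot against the thresholds listed above."""
    return {
        "flake_rate_under_2pct": flake_rate < 0.02,
        "smoke_under_10_min": smoke_minutes < 10,
        "nightly_under_30_min": nightly_minutes < 30,
        "diagnosable_from_evidence_80pct": diagnosable_share >= 0.80,
    }


# Illustrative pilot results (not real data).
result = pilot_exit_check(flake_rate=0.015, smoke_minutes=8,
                          nightly_minutes=27, diagnosable_share=0.85)
ready_to_expand = all(result.values())
```

Keeping the per-criterion result, not just the overall verdict, shows which threshold is blocking the next phase.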

Next 4–8 Weeks

- Standardize folder structure, naming, tags, and data categories.

- Create a quarantine policy, locator conventions (data‑test‑id), and SLAs for PR checks.

- Onboard a second squad with a second set of tests.

Scale Up:

- Run parallel tests across projects.

- Set baseline performance checks as part of regression suites.

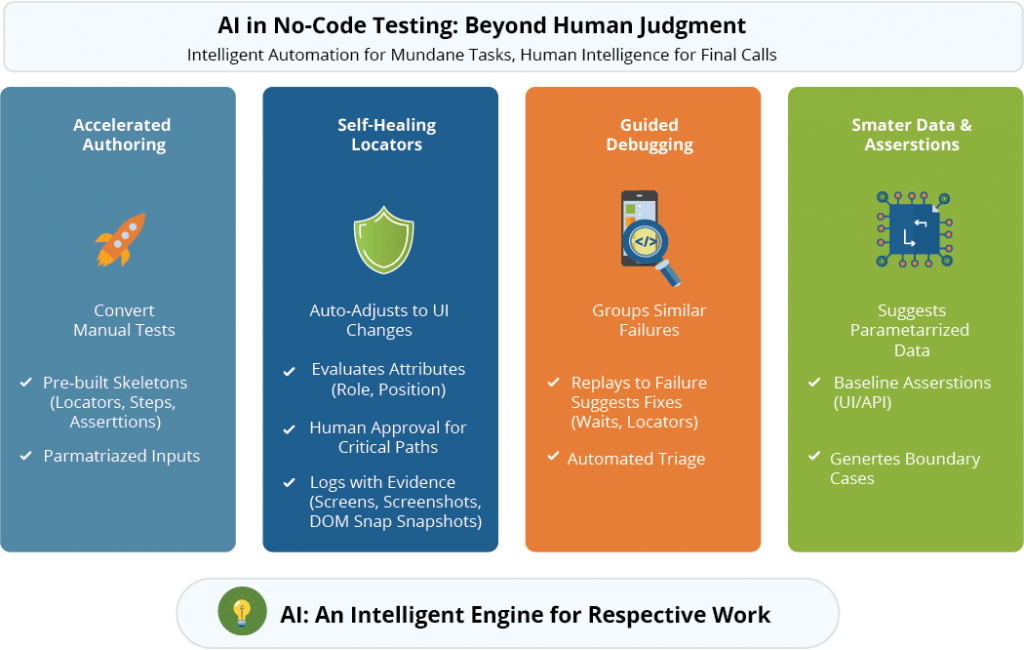

Where Does AI Truly Help in No-Code Testing?

AI contributes most potently to the elimination of repetitive work that does not require human intelligence. It cannot replace human judgment. It is best treated as an extremely intelligent engine that can take mundane but necessary tasks off testers’ hands while letting them make final calls.

Accelerated Authoring

AI agents can convert manual test cases into executable automated tests with minimal human assistance. With no-code solutions like TestWheel, QAs can start with a pre-constructed skeleton including locators, basic steps, and assertions.

Self‑Healing of Locators

Self-healing is not magic. When a primary selector fails because the UI has changed, the AI agent evaluates alternatives (attributes, role/name, relative position) to find a replacement selector.

Maintain a set of “allowed” attributes. Ban fragile ones like dynamic IDs. Configure human approval before healing tests for critical paths.

Every healing event should be logged with evidence such as screenshots, DOM snapshots, and a before/after map of the selector change.
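To make the idea concrete, here is a simplified sketch of attribute-based fallback. It is not TestWheel's algorithm: the DOM is modeled as a list of dictionaries, the allow-list is hypothetical, and real engines also weigh relative position and visual cues.

```python
# Hypothetical allow-list, in order of preference. Dynamic IDs are not on it.
ALLOWED_ATTRIBUTES = ["data-test-id", "role", "name", "aria-label"]


def find(dom, attr, value):
    """Return the element only when exactly one element matches."""
    if value is None:
        return None
    matches = [el for el in dom if el.get(attr) == value]
    return matches[0] if len(matches) == 1 else None


def locate_with_healing(dom, recorded, primary_attr, critical=False):
    """Try the primary selector, then fall back to allowed attributes.

    recorded: attributes captured when the test was authored.
    Returns (element, healing_event); the event is None when no healing occurred.
    """
    el = find(dom, primary_attr, recorded.get(primary_attr))
    if el is not None:
        return el, None
    for attr in ALLOWED_ATTRIBUTES:
        if attr == primary_attr or attr not in recorded:
            continue
        el = find(dom, attr, recorded[attr])
        if el is not None:
            event = {"from": primary_attr, "to": attr,
                     "value": recorded[attr], "needs_approval": critical}
            return el, event
    return None, None


recorded = {"id": "btn-4821", "data-test-id": "submit-order", "role": "button"}
dom = [
    {"id": "btn-9377", "data-test-id": "submit-order", "role": "button"},
    {"id": "btn-1", "data-test-id": "cancel-order", "role": "button"},
]
element, event = locate_with_healing(dom, recorded, "id", critical=True)
```

Here the dynamic `id` no longer matches, so the lookup falls back to `data-test-id`. Because the path is marked critical, the event is flagged for human approval rather than applied silently.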

Guided Debugging

AI agents can help with triage by:

- grouping similar failures across test runs and suggesting possible causes.

- replaying tests to find the first failing assertion.

- recommending possible fixes such as updating locators, adding waits, switching to a more stable attribute.
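Grouping similar failures can start with something as simple as normalizing error messages into signatures. The sketch below uses regular expressions rather than AI, purely to illustrate the grouping step; the failure messages are invented.

```python
import re
from collections import defaultdict


def signature(message: str) -> str:
    """Collapse variable parts so messages of the same kind compare equal."""
    msg = re.sub(r"'[^']*'|\"[^\"]*\"", "<str>", message)   # quoted values
    return re.sub(r"\d+", "<n>", msg)                        # numbers


def group_failures(failures):
    """failures: list of (test_name, error_message) pairs."""
    groups = defaultdict(list)
    for test_name, message in failures:
        groups[signature(message)].append(test_name)
    return dict(groups)


failures = [
    ("checkout", "Timeout after 3000 ms waiting for '#pay-btn'"),
    ("cart", "Timeout after 5000 ms waiting for '#cart-icon'"),
    ("login", "Expected status 200 but got 500"),
]
groups = group_failures(failures)
```

The two timeouts land in one group, which points at a shared cause (for example, slow page load) rather than two unrelated bugs.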

Smarter Data and Assertions

AI engines can suggest smarter parameterized inputs and baseline assertions after studying UI semantics or previous API responses. For instance, they can recommend schema checks for APIs and role/label-based assertions for UI. They can also generate boundary cases if needed and monitor drift from baseline values.
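Two of the suggestions mentioned above, boundary cases and API schema checks, look roughly like this when written by hand. The schema format (field name mapped to a Python type) is a deliberate simplification, not JSON Schema, and the payload is invented.

```python
def boundary_values(minimum: int, maximum: int) -> list:
    """Classic boundary cases around an inclusive integer range."""
    return [minimum - 1, minimum, minimum + 1,
            maximum - 1, maximum, maximum + 1]


def schema_errors(payload: dict, schema: dict) -> list:
    """Report missing fields and wrong types against a simple field->type map."""
    errors = []
    for field, expected_type in schema.items():
        if field not in payload:
            errors.append(f"missing: {field}")
        elif not isinstance(payload[field], expected_type):
            errors.append(f"wrong type: {field}")
    return errors


order_schema = {"id": int, "status": str, "total": float}
response = {"id": 7, "status": "paid"}          # 'total' is absent

quantity_cases = boundary_values(1, 100)
problems = schema_errors(response, order_schema)
```

A reviewer still decides which of these generated cases and checks belong in the suite; the tool only proposes them.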

Important Note:

It’s important to set realistic expectations when it comes to No-code, AI-driven test automation.

Yes, there will be some maintenance.

Yes, it will take time to refactor brittle flows, curate test data, and review selectors periodically.

Just a lot less than with a code-first stack.

No, AI won’t choose test oracles. It will only suggest assertions.

No, it’s not a good idea to use self-healing as a blanket tactic. It is best to gatekeep changes with human approval for critical flows.

No, AI will never replace human testers.

The key is to underline that humans stay in control. AI creates/edits tests; reviewers accept with context. Treat AI edits like code: keep history, allow one‑click reverts, and always link to the relevant test run.

Why TestWheel Should Be Your Gateway into No-Code Software Testing

TestWheel provides what you need to start no-code QA without ripping up existing workflows. It covers web, API, mobile, and performance tests, and brings CI/CD hooks, built-in test management and reporting, and AI-powered self-healing and test creation to the table.

- Enables test creation and execution across UI and APIs, as well as performance tests.

- Users can upload manual test cases written in plain English, and AI will convert them into automated self-healing templates.

- The uploaded natural language input is processed by TestWheel’s AI model. It performs the required actions, such as navigating to specified URLs, filling out fields, clicking buttons, and verifying elements.

- In case of UI changes, the AI model extracts UI elements automatically and identifies the required properties to fetch them for validation.

- The platform scales to handle all test executions once test creation is complete.

- Reports are generated in PDF and playback video format.

- Seamless integration with popular tools like Azure DevOps, Jira, and Jenkins.

- Link test results directly to user stories or tickets in Jira.

- Sync test execution data across development, QA, and DevOps environments.

- Support for REST APIs and webhooks to connect with other platforms.

- Active support from TestWheel technical analysts to train teams and smooth the transition.

TestWheel was originally built to meet the strict security and compliance standards of the U.S. Department of Defense, with native support for Controlled Unclassified Information (CUI) and mission-critical software development. It has passed rigorous vulnerability testing and is Iron Bank-listed, placing it among the DoD’s trusted, hardened software solutions.

The platform meets the high cybersecurity demands of regulated industries like banking, finance, insurance, and healthcare. It offers data masking, encryption, and full test lifecycle protection to deliver a secure, enterprise-grade foundation for no-code test automation.